Exploring GPT-4 and Its Context Length

This blog post examines GPT-4 and its context length. It notes that GPT-4 generally lacks knowledge of events after September 2021 and does not learn from its experience, and it covers the 8,192-token context length and the limited access to the 32,768-token version, gpt-4-32k.

Simon Willison



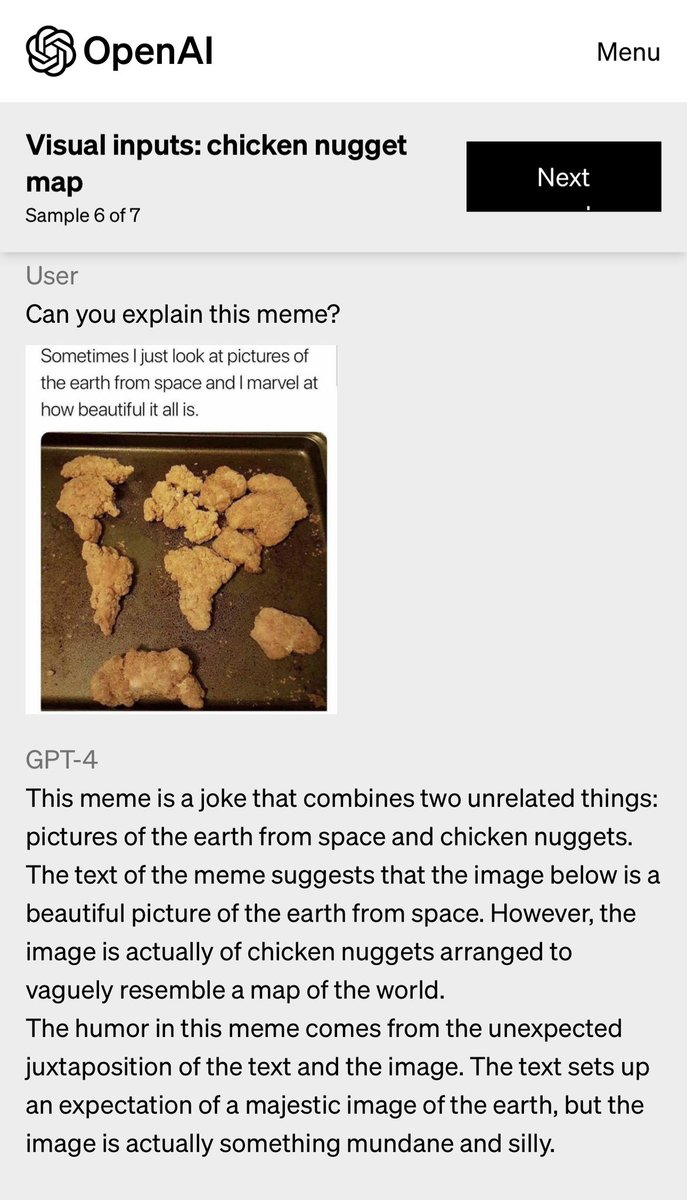

Multimodal! https://t.co/3ehD6N8MlP

— Simon Willison (@simonw) March 14, 2023

Cannot wait to try this out pic.twitter.com/hxzZUvkqrR

— Simon Willison (@simonw) March 14, 2023

"GPT-4 generally lacks knowledge of events that have occurred after the vast majority of its data cuts off (September 2021), and does not learn from its experience."

— Simon Willison (@simonw) March 14, 2023

Surprising that it has the same knowledge cut-off as GPT-3. Was it trained on the same data?

"gpt-4 has a context length of 8,192 tokens. We are also providing limited access to our 32,768–context (about 50 pages of text) version, gpt-4-32k"

— Simon Willison (@simonw) March 14, 2023

That's what I was most hoping for: it enables things like summarizing full academic papers, rather than having to split them into chunks first.
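To make the 8,192 versus 32,768 difference concrete, here is a minimal Python sketch that checks whether a document fits in each window and, if it does not, splits it into chunks. It is an illustration under stated assumptions, not how OpenAI counts tokens: it estimates tokens from word counts using the rough rule of thumb that a token is about three quarters of an English word, whereas real code would use the model's own tokenizer. The function names and the 1,000-token reserve for the model's reply are hypothetical.

```python
# Minimal sketch, NOT OpenAI's tokenizer: token counts are ESTIMATED from
# word counts, assuming roughly 4 tokens per 3 English words.
GPT4_CONTEXT = 8_192        # gpt-4 context length, in tokens
GPT4_32K_CONTEXT = 32_768   # gpt-4-32k context length, in tokens


def estimate_tokens(text: str) -> int:
    """Approximate token count: ceil(words * 4 / 3), using integer arithmetic."""
    words = len(text.split())
    return (words * 4 + 2) // 3


def fits(text: str, context_length: int, reserved_for_output: int = 1_000) -> bool:
    """True if the prompt still leaves `reserved_for_output` tokens for the reply.

    The 1,000-token default reserve is a hypothetical choice.
    """
    return estimate_tokens(text) + reserved_for_output <= context_length


def split_into_chunks(text: str, max_tokens: int) -> list[str]:
    """Split text into consecutive word runs whose estimated size is <= max_tokens."""
    if max_tokens < 2:
        raise ValueError("max_tokens must be at least 2 (one word estimates to 2 tokens)")
    # Largest word count whose estimate (words * 4/3, rounded up) stays within max_tokens.
    max_words = (max_tokens * 3) // 4
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]


if __name__ == "__main__":
    paper = "word " * 20_000  # stand-in for a ~20,000-word academic paper
    print(estimate_tokens(paper))                                # 26667
    print(fits(paper, GPT4_CONTEXT))                             # False
    print(fits(paper, GPT4_32K_CONTEXT))                         # True
    print(len(split_into_chunks(paper, GPT4_CONTEXT - 1_000)))   # 4
```

Under the same rule of thumb, 32,768 tokens is about 24,576 words, which at an assumed 500 words per page lines up with the "about 50 pages" in OpenAI's announcement. A 20,000-word paper needs four chunks for the 8K model but fits in a single 32K request, which is exactly the splitting step the larger window removes.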

Bing was GPT-4 all along! https://t.co/agZI4ShxTW

— Simon Willison (@simonw) March 14, 2023

The "GPT-4 Technical Report" is a 98-page PDF which has a whole bunch of dense additional information that may not have been reported widely yet https://t.co/f3hT2zdEYb

— Simon Willison (@simonw) March 14, 2023