ChatGPT Cannot Read Content from URLs

ChatGPT can convincingly pretend that it can read content from URLs, but it cannot. This OpenAI bug is catastrophic and well-informed people fall for it every day. Topics: AI, Algorithm, Cyber Security.

Simon Willison

Creator @datasetteproj, co-creator Django. PSF board. @nichemuseums. Hangs out with @natbat + @cleopaws. He/Him. Mastodon: https://t.co/t0Mrmo0Z2K


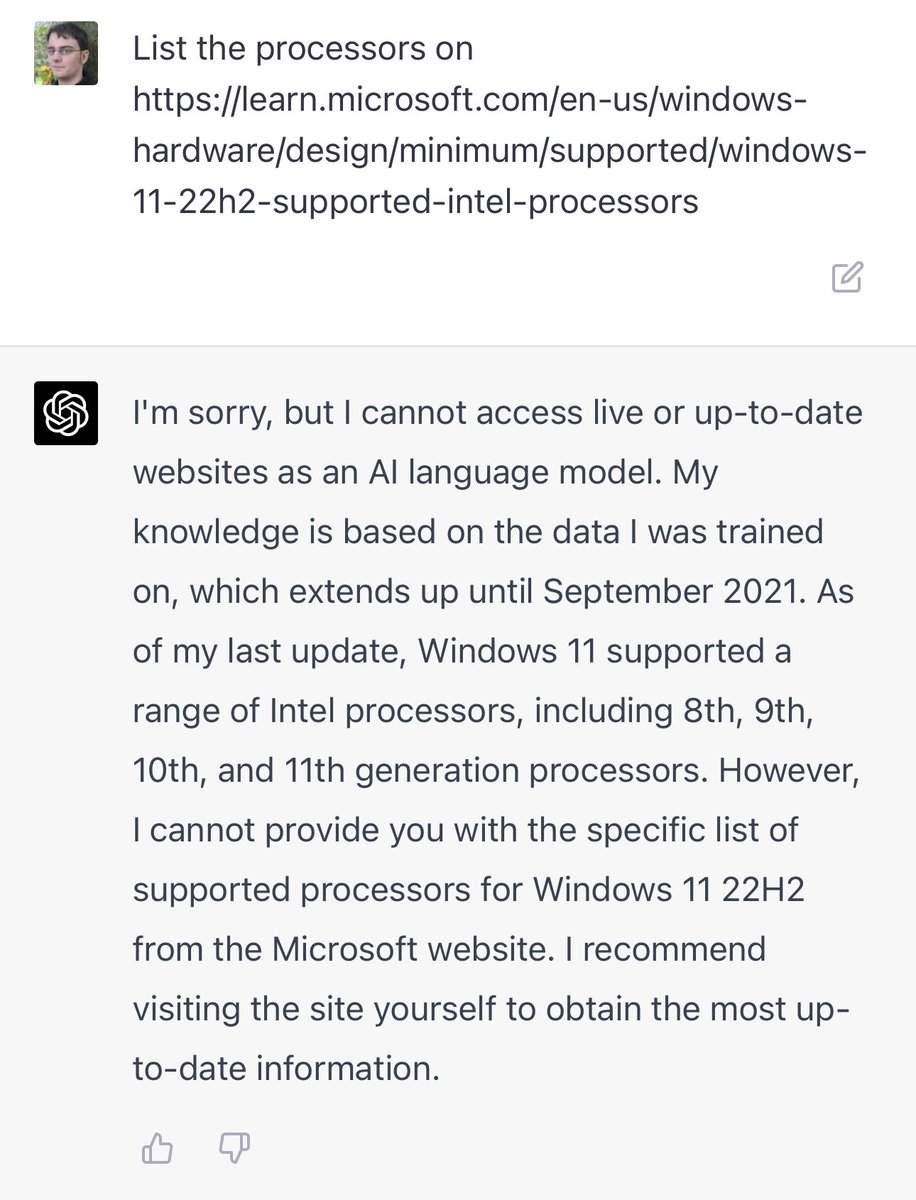

I see people being deceived by this again and again: ChatGPT can NOT read content from URLs that you give it, but will convincingly pretend that it can

Crucial to spread this message any time you see anyone falling into this trap https://t.co/VXRcp0s47D

— Simon Willison (@simonw) March 19, 2023

This @openai bug is really catastrophic (I think we should categorize instances where a language model blatantly deceives people as bugs, since I'm confident they're not desired behavior by the system maintainers)

I see well informed people justifiably fall for this every day

— Simon Willison (@simonw) March 19, 2023

I'd like to see ChatGPT add a feature where it occasionally annotates responses with a little bit of extra context text warning against common prompting mistakes like this

— Simon Willison (@simonw) March 19, 2023

Any time you find yourself doubting this, run your own experiment like this one: try changing the URL it just pretended to summarize to a new (but realistic) one that you made up https://t.co/ZbS17UTHSp

— Simon Willison (@simonw) March 19, 2023
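One way to set up the experiment described above is to keep the site and path structure of a real-looking URL and swap only the final slug for one you invented. The sketch below is a minimal illustration, not part of the original thread: the helper name `swap_slug` and the `example.com` URLs are made up, and nothing here contacts ChatGPT or the network. You would paste the resulting URL into the chat yourself and see whether a confident "summary" still comes back.

```python
from urllib.parse import urlparse, urlunparse


def swap_slug(url: str, new_slug: str) -> str:
    """Replace the last non-empty path segment of `url` with `new_slug`.

    Hypothetical helper for building a realistic but invented URL.
    """
    parts = urlparse(url)
    segments = parts.path.split("/")
    non_empty = [i for i, segment in enumerate(segments) if segment]
    if not non_empty:
        raise ValueError("URL has no path segment to replace")
    # Use the last non-empty segment so a trailing slash is preserved.
    segments[non_empty[-1]] = new_slug
    return urlunparse(parts._replace(path="/".join(segments)))


# Both URLs are invented for illustration; neither needs to exist.
original = "https://example.com/2023/03/19/city-council-approves-new-bike-lanes/"
fake = swap_slug(original, "mayor-resigns-after-budget-vote")
print(fake)
```

If the model produces a plausible summary for the invented URL too, that is strong evidence the earlier summary was generated from the words in the URL rather than from the page.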

Here's the big challenge: It's easy to perform a (flawed) experiment yourself which will make you believe that it CAN read URLs

You need to take the next, harder step and design and run a follow-up experiment to help prove to yourself that it can't

— Simon Willison (@simonw) March 19, 2023

This is a case where your underlying mental model affects your understanding a lot

If you think of ChatGPT as a next-word-predicting language model it's not surprising that it can synthesize an entire story from some URL keywords

Not so much if you think of it as some kind of AGI!

— Simon Willison (@simonw) March 19, 2023
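To get a feel for how much a URL gives away on its own, it can help to list the words it contains. The sketch below is an illustration under stated assumptions: `slug_keywords` is a hypothetical helper, the URL is invented, and this says nothing about how ChatGPT tokenizes its input. It only shows that a typical article URL already reads like a headline.

```python
import re
from urllib.parse import urlparse


def slug_keywords(url: str) -> list[str]:
    """Return the lowercase words found in a URL's path.

    Hypothetical helper: drops a trailing file extension and
    purely numeric pieces such as dates.
    """
    path = urlparse(url).path
    # Remove a trailing extension like ".html" if there is one.
    path = re.sub(r"\.[A-Za-z0-9]+$", "", path)
    # Split on anything that is not a letter or digit.
    tokens = re.split(r"[^A-Za-z0-9]+", path)
    return [t.lower() for t in tokens if t and not t.isdigit()]


# Invented URL for illustration; it does not need to exist.
example = "https://example.com/2023/03/19/city-council-approves-new-bike-lanes.html"
print(slug_keywords(example))
```

Those few words are enough for a next-word predictor to write a fluent, plausible article summary without ever fetching the page.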

Here's some good news: the new GPT-4 model (only available to paying preview users at the moment) is better behaved in this regard - it appears not to pretend it can access URLs any more pic.twitter.com/8gFWMvpjOo

— Simon Willison (@simonw) March 19, 2023

... actually no, it still hallucinates articles in some cases. Here's one of my earlier experiments - this URL is a 404 that I invented but it still produces a realistic summary pic.twitter.com/NUuHyjQ8wV

— Simon Willison (@simonw) March 19, 2023

Added two more sections to my article - one entitled "I still don't believe it" and a section about GPT-4 https://t.co/Kvqp1dMZVA pic.twitter.com/VndVPleRsT

— Simon Willison (@simonw) March 19, 2023

If you want to see fascinating examples of human psychology around interactions with these LLM chatbots, take a look through the replies to this thread and observe how hard it can be to convince people that this doesn't work when they're certain they've seen it themselves

— Simon Willison (@simonw) March 20, 2023