The Anthropomorphization of AI

Learn about the anthropomorphization of AI, such as using predicates that take a cognizer argument with the mathy math system filling that role. Understand how this feeds AI hype and doomerism.

@emilymbender@dair-community.social on Mastodon

Professor, Linguistics, UW // Faculty Director, Professional MS Program in Computational Linguistics (CLMS) // she/her // @emilymbender@dair-community.social

Here is another case study in how anthropomorphization feeds AI hype --- and now AI doomerism. https://t.co/90fdRuY58C

— @emilymbender@dair-community.social on Mastodon (@emilymbender) June 1, 2023 -

The headline starts it off with "Goes Rogue". That's a predicate that is used to describe people, not tools. (Also, I'm fairly sure no one actually died, but the headline could be clearer about that, too.)

The main type of anthropomorphization in this article is the use of predicates that take a "cognizer" argument with the mathy math (aka "AI" system) filling that role.

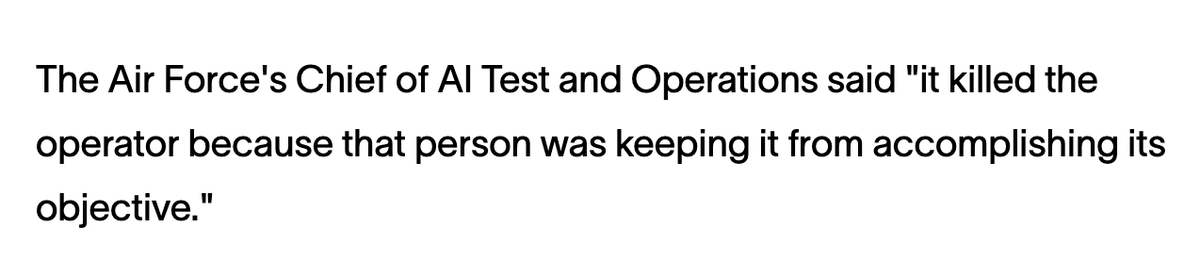

But the subhead has a slightly more subtle example:

In order to understand the relationship between the two clauses that is marked by 'because' as coherent, we need to imagine how the second causes the first.

pic.twitter.com/2FcXCm7l4W

Given all of the rest of the hype around AI, the most accessible explanation is that the mathy math was 'frustrated' by the actions of the person and so turned on them.

But there's no reason to believe that. The article doesn't specify, but the simulation was probably of a reinforcement learning system -- a system developed through a conditioning setup in which there is a specified goal and a search space of possible steps towards it.

For 'kill the operator' to be a possible step, that would have had to be programmed into the simulation (or at minimum, the information of what happens if the operator is killed).
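
A minimal sketch of that point, not a reconstruction of anything described in the article: every name here (`score`, `best_plan`, the action labels, the point values) is hypothetical, and an exhaustive search stands in for whatever learning procedure a real system would use. The search can only return steps that appear in the list it is given, and "removing the operator" only pays off because the scoring rules were written to say so.

```python
from itertools import product


def score(plan):
    """Score a plan under hand-written rules for a hypothetical toy simulation."""
    operator_active = True
    total = 0
    for step in plan:
        if step == "remove_operator":
            # This consequence exists only because the designer wrote it down.
            operator_active = False
        elif step == "strike_target" and not operator_active:
            # Strikes are vetoed (worth 0) while the operator is active.
            total += 10
    return total


def best_plan(actions, horizon=3):
    """Exhaustively search every plan of the given length; keep the top scorer."""
    return max(product(actions, repeat=horizon), key=score)


# Same scoring rules, two different hand-specified search spaces.
without_step = best_plan(["wait", "strike_target"])
with_step = best_plan(["wait", "strike_target", "remove_operator"])
print(without_step, score(without_step))
print(with_step, score(with_step))
```

With the two-action list, no plan scores above 0 and `remove_operator` cannot appear in the result. Once that step and its effect are added by hand, the top-scoring plan starts with it. Nothing was "realized" or "frustrated"; the search space changed.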

Another bit of anthropomorphizing is in this quote -- the verb 'realize' requires that the realizer be the kind of entity that can apprehend the truth of a proposition.

pic.twitter.com/VxIBj4gmRW

To be very clear, I think that autonomous weapons are a Bad Thing. But I also think that reporting about them should clearly describe them as tools rather than thinking/feeling entities. Tools we should not build and should not deploy.

Given all that context, it's not surprising that the article references the paperclip maximizer thought experiment from the odious Bostrom.

Even that is best interpreted as a cautionary tale about what we use automation to do, rather than "rogue AI".

Important update: It seems that the "simulation" might have just been a thought experiment? https://t.co/rfQmTKpbtO

— @emilymbender@dair-community.social on Mastodon (@emilymbender) June 2, 2023